TinyML Is Simpler (And More Useful) Than It Seems

- Charles Edge

- Nov 12, 2021

- 2 min read

Most of our projects these days involve machine learning in some way. Writing these tools usually starts with importing, let’s say, TensorFlow, NumPy, Matplotlib, and maybe two or three other libraries into Python. Those imports take time on every run (even as a micro-service out in a Lambda or Google Cloud Function).
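To get a feel for that start-up cost, here is a minimal sketch that times an import using only the standard library. The function name `time_import` is made up for illustration, and the numbers will vary a lot by machine; a module that is already loaded in the current process will come back almost instantly, so this only approximates a cold start.

```python
import importlib
import time


def time_import(module_name):
    """Return the seconds spent importing module_name in this process.

    Hypothetical helper for illustration. If the module was already
    imported, Python serves it from its cache and the result is near zero.
    """
    start = time.perf_counter()
    importlib.import_module(module_name)
    return time.perf_counter() - start


if __name__ == "__main__":
    # Standard-library modules are used here so the sketch runs anywhere.
    for name in ("json", "decimal"):
        print(f"{name}: {time_import(name) * 1000:.1f} ms")
```

Swapping in `"tensorflow"` or `"numpy"` (assuming they are installed) should make the difference between a heavy and a light dependency fairly obvious.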

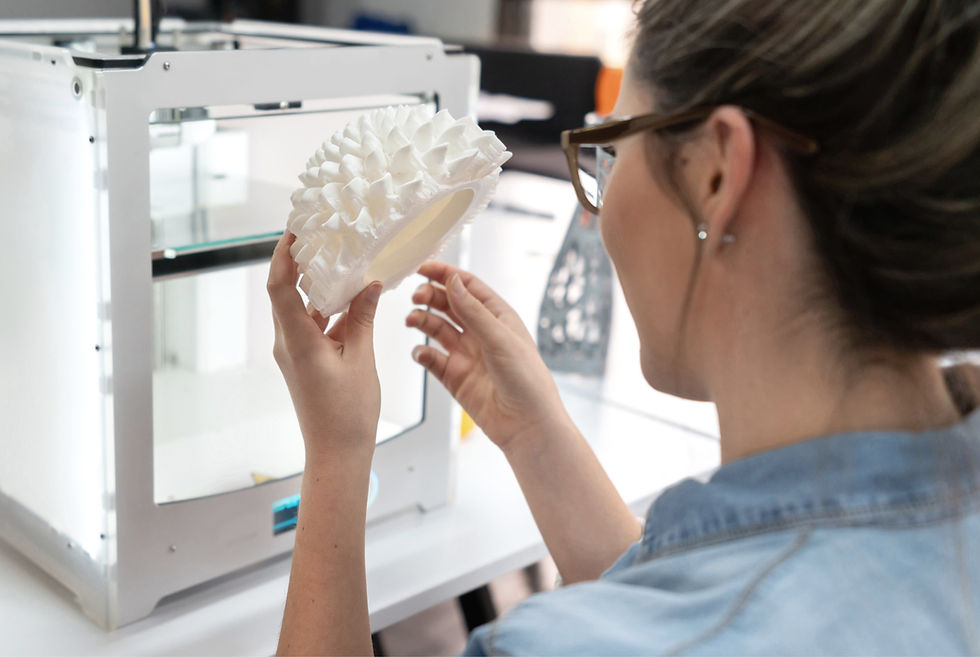

But let’s say we’re using an Arduino with a simple camera to do image recognition, or controlling a fan based on a number of inputs being crunched by some SVM model. If we import all this extra cruft every single time something runs, we’re going to drain the battery the Arduino is plugged into, or processing is going to take too long to be useful, because each transaction comes at an unnecessary cost. And if we transfer that data to a remote micro-service instead, we're using network interfaces and suffering from latency.
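For the fan example, it helps to remember how little work inference can be once a model is trained. A linear SVM's decision is just a dot product plus a bias, so a rough sketch needs no libraries at all. The weights, bias, and input features below are invented for illustration and do not come from a real trained model.

```python
def linear_svm_decision(features, weights, bias):
    """Score of a linear SVM: w . x + b. Positive means the 'on' class."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def fan_should_run(temperature_c, humidity_pct):
    # Hypothetical parameters standing in for values exported from training.
    weights = (0.20, 0.05)
    bias = -8.0
    score = linear_svm_decision((temperature_c, humidity_pct), weights, bias)
    return score > 0.0


if __name__ == "__main__":
    print(fan_should_run(35.0, 60.0))  # hot and humid
    print(fan_should_run(20.0, 40.0))  # cool and dry
```

The same arithmetic ports to a few lines of C on a microcontroller, which is the whole point: the heavy libraries are needed for training, not for answering "fan on or off?".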

Now let’s say we use TensorFlow Lite for Microcontrollers and effectively convert our model into a C file, rather than carrying around a big old Jupyter notebook like we might require for more expansive projects. Now we can do this one small image recognition task by importing our existing work and converting it. We’ve also used Core ML to import our models from TensorFlow so we can, as an example, perform complicated tasks on an iPhone and keep data on the phone rather than swapping it back and forth with a cloud controller, which makes Apple’s privacy policies happy.

Now, let's say we have a huge neural network doing deep learning to automatically tag posts or run sentiment analysis on huge troves of data. Those need to stay in Apache Spark or some other system, doing their thing. But we can still apply some of the basic concepts from TensorFlow Lite or Core ML. Moving as much of the workload off to an edge device as possible gives us a little less telemetry but reduces our hosting costs, likely improves speed, and makes for happier users. And further, we can often take some of the workloads that need to remain cloud-hosted for one reason or another, compile them into C or C++ with a tool like TensorFlow Lite, and get the benefits of running as a compiled artifact (although arguably we're adding a little complexity to our DevOps, so it may not be cost-justified until it becomes far cheaper at scale).

In general, anyone working in the machine learning space, even on small projects, needs to check out some of what's happening in the TinyML movement. After all, many modern ARM-based devices, phones especially, ship with hardware dedicated to machine learning (Apple's Neural Engine, for example). This means reading up on tools like TensorFlow Lite, PyTorch Mobile, and Apple's Core ML, and maybe picking up a sub-$100 Arduino-type board. Then maybe finding a cool model on GitHub, customizing it, and loading it into one of these frameworks.

The more downmarket machine learning goes, the more software is eating the world, but also the more these devices can augment our human intellects!
